A recent study by neuroscience researchers at the University of Geneva (UNIGE) and the University Hospitals of Geneva (HUG) has explored whether internal language can be decoded in people who have lost the ability to express themselves. The team identified neural signals that show promise for capturing internal monologues, as well as brain areas to prioritize in future studies. The results open new perspectives and mark a step toward the development of interfaces for people with aphasia.

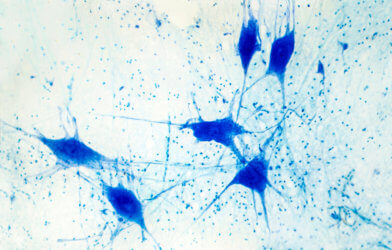

Speech engages several regions of the human brain, and damage to the nervous system can severely impair the function of those areas. Some patients with amyotrophic lateral sclerosis (ALS), for example, are left with complete paralysis of the muscles used in speech.

Another example of speech impairment caused by nervous system damage is aphasia following a stroke, although some of these patients retain a partial ability to imagine words and sentences. Decoding internal speech is a complex task, but its relevance to conditions like aphasia makes it a key goal for neuroscience researchers.

“Several studies have been conducted on the decoding of spoken language, but much less on the decoding of imagined speech,” says Timothée Proix, a scientist in the Department of Basic Neuroscience at the UNIGE Faculty of Medicine, in a statement. “This is because, in the latter case, the associated neural signals are weak and variable compared to explicit speech. They are therefore difficult to decode by learning algorithms.”

When people speak out loud, they produce sounds at specific, measurable moments, which lets researchers link those sounds to the brain regions involved. Imagined speech offers no such outward markers, so scientists have no clear view of the sequencing and tempo of words and sentences formulated internally. In addition, imagined speech recruits fewer brain areas, and those areas are less active.

Examining the brain while patients imagine words

In their study, the UNIGE researchers worked with two American hospitals to assemble a panel of thirteen hospitalized patients. Each patient had electrodes implanted directly in the brain as part of the assessment of their epileptic disorders, and the team used recordings from these electrodes for its analysis.

“We asked these people to say words and then to imagine them. Each time, we reviewed several frequency bands of brain activity known to be involved in language,” said Anne-Lise Giraud, a professor in the Department of Basic Neuroscience at the UNIGE Faculty of Medicine, newly appointed director of the Institut de l’Audition in Paris and co-director of the NCCR Evolving Language.

The results showed several kinds of frequencies, produced by different brain areas, both when patients spoke words and when they imagined them.

“First of all, the oscillations called theta (4-8Hz), which correspond to the average rhythm of syllable elocution. Then the gamma frequencies (25-35Hz), observed in the areas of the brain where speech sounds are formed,” explains Pierre Mégevand, assistant professor in the Department of Clinical Neurosciences at the Faculty of Medicine of the UNIGE and associate physician at the HUG. “Thirdly, beta waves (12-18Hz) related to the cognitively more efficient regions solicited, for example, to anticipate and predict the evolution of a conversation. Finally, the high frequencies (80-150Hz) that are observed when a person speaks out.”
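For readers curious about what a "frequency band" means in practice, the following is a minimal Python sketch, not the method used in the study, that measures how much of a signal's power falls into each band Mégevand names. The band edges come from his description; the function name `band_powers`, the naive discrete Fourier transform, the 500 Hz sampling rate and the synthetic sine-wave input are all illustrative assumptions. Real intracranial recordings would call for proper filtering and far more careful analysis.

```python
import cmath
import math

# Band edges in Hz, taken from the ranges described by Pierre Mégevand.
# Frequencies falling between bands (e.g. 8-12 Hz) are ignored.
BANDS = {
    "theta": (4.0, 8.0),
    "beta": (12.0, 18.0),
    "gamma": (25.0, 35.0),
    "high": (80.0, 150.0),
}


def band_powers(signal, fs):
    """Sum |X_k|^2 / n over the DFT bins that fall inside each band.

    signal: sequence of real-valued samples; fs: sampling rate in Hz.
    Uses a naive O(n^2) discrete Fourier transform for clarity, not speed.
    """
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    # For a real signal, bins from 0 Hz up to Nyquist (fs / 2) suffice.
    for k in range(n // 2 + 1):
        freq = k * fs / n
        # Find the (non-overlapping) band this frequency bin belongs to, if any.
        band = next((name for name, (lo, hi) in BANDS.items() if lo <= freq <= hi), None)
        if band is None:
            continue
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
        powers[band] += abs(coeff) ** 2 / n
    return powers


if __name__ == "__main__":
    fs = 500  # hypothetical sampling rate in Hz
    # One second of a pure 6 Hz sine wave, i.e. inside the theta band.
    sine = [math.sin(2 * math.pi * 6 * t / fs) for t in range(fs)]
    powers = band_powers(sine, fs)
    print(max(powers, key=powers.get))  # theta
```

A pure 6 Hz tone puts essentially all of its power in the theta band, while a 30 Hz tone would land in gamma. Actual brain signals mix many bands at once, which is part of why decoding them is hard.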

Using the data collected in their study, the researchers showed that low frequencies, and the coupling of certain other frequencies (most notably beta and gamma), carry information that is critical for decoding internal speech. The team also identified a brain area they consider important for future decoding of imagined speech: the temporal cortex. The temporal cortex lies in the lateral part of each hemisphere and processes information related to hearing and memory; in the left hemisphere, it also houses part of Wernicke’s area, which is involved in processing words and other language symbols.

The researchers believe their findings are a significant step toward reconstructing speech from brain activity, and that a better understanding of this area may lead to new and effective treatments for patients with aphasia.

“But we are still a long way from being able to decode imagined language,” they concluded.

This study is published in Nature Communications.

Article written by Anna Landry